“The culture that’s going to survive in the future is the culture you can carry around in your head.”—Nam June Paik, as described by Arthur Jafa1

I like to think that I could pick my friends out of a line-up. I assume that I know their faces well enough. But I am alarmed, when I focus on images of their faces too closely, at how quickly they can become unreadable. Kundera wrote, “We ponder the infinitude of the stars but are unconcerned about the infinitude our papa has within him,” which is a beautiful but roundabout way of saying that those you love can become strange in an instant.2

A canyon opens up in this moment of strangeness, between their facial expressions, like sigils, and the meanings I project onto them. As I try to map out why I know they mean what I think they do, their faces turn back to some early first state, bristling with ciphers and omens. Their words become polysemous, generating a thousand possible interpretations.

Each time we face a new person, an elegant relational process unfolds in which we learn to read the other’s face to trust they are human, like us. A relaxed smile, soft eyes, an inviting smirk combine in a subtle arrangement to signal a safe person driven by a mind much like one’s own. Messy alchemists, we compress massive amounts of visual data and flow between our blind spots and projections and theirs to create enough of an objective reality to move forward.

Early hominids mapped the complex signs transpiring on surrounding faces to discern intention, orientation, and mood. Their relational dynamics helped develop them into linguistic beings so bound and made through language that the first embodied act of face-reading now seems to belong to the realm of the preconscious and prelinguistic. And social rituals developed in turn for people to help others read them, to signal transparency—that one’s mind is briefly, completely accessible to another.

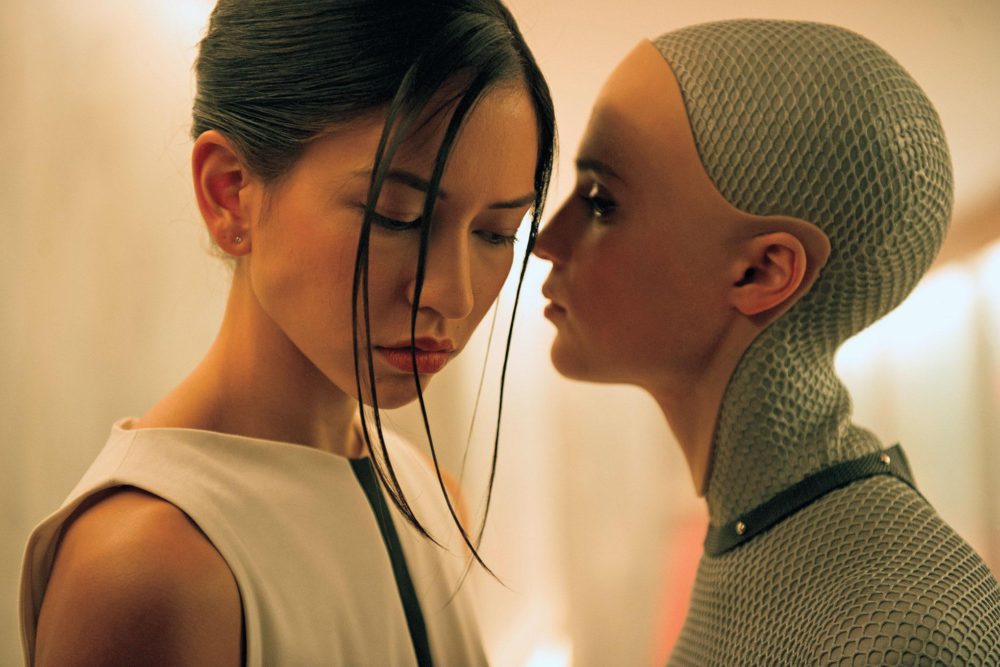

Out on this abstract semiotic landscape, humans stagger through encounters with other species and nonhuman intelligences, trying to parse their obscure intentions with the same cognitive tools. Emerging artificial intelligences can be thought of as having a face, as well—one that presents as human-like, human-sympathetic, humanoid. Though animals also listen to us and mirror us, artificial intelligences are more formidable, using complex data analytics, powerful visual and sound surveillance fueled by massive computing power, to track and map your inner thoughts and desires, present and future.

Further, global computational culture plays on the very vulnerabilities in humans’ face- and mind-reading, the processes that help us discern intention and trustworthiness. The computational ‘face’ composes attitudes and postures, seeming openness and directness, conveyed through its highly designed interfaces, artificial languages, and artificial relationality.

Simulating the feeling of access to the machine’s ‘mind’ sates the human brain’s relentless search for a mirroring, for proof of a kind of mind in every intelligent-seeming system that twitches on its radar. Artificial intelligence is relentlessly anthropomorphized by its designers to simulate the experience of access to a kind of caring mind, a wet nurse that cares for us despite our knowing better.

Computers, technological devices, platforms, and networks are habitually, now, the faces of powerful social engineering, the efforts of invested groups to influence society’s behavior in a lasting way. The designed illusion of blankness and neutrality is so complete that users “fill in” the blank with a mind that has ethics and integrity resembling a person’s, much as they might with a new person. B.J. Fogg, the Stanford professor who founded captology (the study of persuasive technology and behavioral design), writes, hedging, that computers can “convey emotions [but] they cannot react to emotions, [which gives] them an unfair advantage in persuasion.”3

Stupidly, we wrap ourselves around devices with a cute aesthetic without thinking to check whether they have teeth. Our collective ignorance in this relationship is profound. We spend an unprecedented amount of time in our lives being totally open and forthright with intelligent systems, beautifully designed artifacts that exercise feigned transparencies. The encounter with artificial intelligences is not equal or neutral; one side has more power, charged with the imperative that we first make ourselves perfectly readable, revealing who we are in a way that is not and could not be returned.

What beliefs do we even share with our artificial friends? What does it do to us to speak with artificial voices and engage with systems of mind designed by many stakeholders with obscure goals? What does it do to our cognitive process to engage continually with hyperbolic, manufactured affect, without reciprocity?

Misreading this metaphorical face comes at a cost. We can always walk away from people we do not fundamentally trust. The computational mind subtly, surely, binds us to it and does not let go, enforcing trust as an end-user agreement. Complicating matters, even if we learn to stop anthropomorphizing AI, we are still caught in a relationship with an intelligence that parrots and mimics our relationality with other people, and works overtime to soothe and comfort us. We have grown to need it desperately, in thrall to a phenomenally orchestrated mirror that tells us what we want to hear and shows us what we want to see.

Of course, we participate in these strange and abusive relationships with full consent because the dominant paradigm of global capitalism is abuse. But understanding exactly how these transparencies are enacted can help explain why I go on to approach interfaces with a large amount of unearned trust, desiring, further, a tempered emotional reveal, an absolution and forgiveness.

Co-Evolution with Simulations

Governments and social media platforms work together to suggest a social matrix based on the data that should ostensibly prove, beyond a doubt, that faces reveal ideology, that they hold the keys to inherent qualities of identity, from intelligence to sexuality to criminality. There is an eerie analogue to phrenology, the 19th century’s fake science in which one’s traits, personality, and character were unveiled through caliper measurements of the skull.

Banal algorithmic systems offer a perverse and titillating promise that through enough pattern recognition of faces (and bodies), form can be mapped one to one to sexuality, IQ levels, and possible criminality. The inherent qualities of identities and orientations, the singular, unchangeable truth of a mind’s contents and its past and future possibilities, are to be predicted based on the space between your eyes and your nose, your gait, your hip-to-waist ratio, and on and on.

The stories about emerging ‘developments’ in artificial intelligence research that predict qualities based on facial mapping read as horror. The disconnect and stupidity of this type of design, as Hito Steyerl has described, are profound.4 Computational culture created by a single-channel, corporate-owned model is couched, foremost, in the imperative to describe reality through a brutal set of norms describing who people are and how they should and will act (according to libidinal needs).

Such computational culture is the front along which contemporary power shapes itself, engaging formal logics and the insights of experts in adjacent fields—cognitive psycholinguists, psychologists, critics of technology, even—to disappear extractive goals. That it works to seem rational, logical, without emotion, when it is also designed to have deep, instant emotional impact, is one of the greatest accomplishments of persuasive technology design.

Silicon Valley postures values of empathy and communication within its vast, inconceivable structure that embodies a serious “perversion and disregard for human life.”5 As Matteo Pasquinelli writes, artificial intelligence mimics individual social intelligence with the aim of control.6 His account of sociometric AI insists that we cannot confine ourselves to philosophical discussions of theory of mind while ignoring how Northern California’s technological and financial platforms actually create AI. The philosophical debate fuels the technical design, and the technical design fuels the philosophical modeling.

Compression

As we coevolve with artificial minds wearing simulations of human faces, human-like gestures essentialized into a few discrete elements within user interface (UI) and artificial language, we might get to really know our interlocutors. The AI from this culture is slippery, a masterful mimic. It pretends to be neutral, without any embedded values. It perfectly embodies a false transparency, neutrality, and openness. The ideological framework and moral biases that are embedded are hidden behind a very convincing veneer of neutrality. Its neutral, almost pleasant face is designed not to be read as “too” human; this AI needs you to continue to be open and talk to it, and that means eschewing the difficulty, mess, and challenge of human relationships.

This lesser, everyday AI’s trick is its acting, its puppeteering of human creativity and gestures at consciousness with such skill and precision that we fool ourselves, momentarily, into believing in the presence of a kind of ethical mind. The most powerful and affecting elements of relating are externalized in the mask to appeal to our solipsism. We just need a soothing and hypnotic voice, a compliment or two, and our overextended brains pop in, eager to simulate and fill in the blanks. Thousands of different artificial voices and avatars shape and guide our days like phantoms. The simulations only parrot our language to a degree, displaying an exaggerated concentrate of selected affect: care, interest, happiness, approval. In each design wave, the digital humanoid mask becomes more seamless, smoothly folded into our conversation.

How we map the brain through computer systems, our chosen artificial logic, shapes our communication and self-conception. Our relationship to computation undergirds current relations to art, to management, to education and design, to politics. How we choose to signify the mind in artificial systems directs the course of society and its future, mediated through these systems. Relationships with humanoid intelligences influence our relationships to other people, our speech, our art, our sense of possibilities, even an openness to experimentation.

Artificial intelligence and artifactual intelligence differ in many important ways, yet we continue to model them on each other. And how the artificial mind is modeled to interact is the most powerful tool technocracy has. But even knowing all of this, it is naïve to believe that simple exposure and unstitching of these logics will help us better arbitrate what kind of artificial intelligence we want to engage with.

It seems more useful to outline what these attempts at compression do to us, how computational culture’s logical operations, enacted through engineered, managed interactions, change us as we coevolve with machine intelligence. And from this mapping, we might be able to think of other models of computational culture.

Incompressibility

It is hard to find, in the human-computer relationship as outlined above, allowances for the ineffability that easily arises between people, or a sense of communing on levels that are unspoken and not easy to name. But we know that there are vast tranches of experience that cannot be coded or engineered for, in which ambiguity and multiplicity and unpredictability thrive, and understand on some level that they create environments essential for learning, holding conflicting ideas in the mind at once, and developing ethical intelligence.

We might attempt to map a few potential spaces for strangeness and unknowing in the design of the relationship between natural minds and artificial minds. We might think on how such spaces could subvert the one-two hit of computational design as it is experienced now, deploying data analytics in tandem with a ruthless mining of neurological and psychological insights on emotion.

On the level of language, the certain, seamless loop between human and computer erases or actively avoids linguistic ambiguity and ambiguity of interpretation in favor of a techno-positivist reality, in which meaning is mapped one to one with its referent for the sake of efficiency. With the artificial personality, the uncertainty that is a key quality of most new interactions is quickly filled in. There is no space for an “I don’t know,” or “Why do you say this,” or “Tell me what makes you feel this way.” A bot’s dialogue is constrained, tightened, and flattened; its interface has users clip through the interaction. So the wheel turns, tight and unsparing.

There is no single correct model of AI, but instead, many competing paradigms, frameworks, architectures. As long as AI takes a thousand different forms, so too, as Reza Negarestani writes, will the “significance of the human [lie] not in its uniqueness or in a special ontological status but in its functional decomposability and computational constructability through which the abilities of the human can be upgraded, its form transformed, its definition updated and even become susceptible to deletion.”7

How might we reroute the relentless “engineering loop of logical thought,” as described in the framing of this issue of Glass Bead, in a way that strives towards intellectual and material freedom? What traits would an AI that is radical for our time, meaning not simply in service of extractive technological systems, look like? Could there exist an AI that is both ruthlessly rational and in service of the left’s project? Could our technologies run on an AI in service of creativity, one that can deploy ethical and emotional intelligence that countermands the creativity and emotional intelligence of those on the right?

There is so much discussion in art and criticism of futures and futurity without enough discussion of how a sense of a possible future is even held in the mind, or of the trust it takes to develop a future model with others. Believing we can move through and past oppressive systems and structures to something better than the present is a matter of shared belief.

The seamless loop between human and computer, with its one-to-one mapping of meaning to referent, is built for efficiency. But we do not thrive, socially, intellectually, personally, in purely efficient relationships. Cognition is a process of emergent relating. We engage with people over time to create depth of dimensionality.

We learn better if we can create intimate networks with other minds. Over time, the quality and depth of our listening, our selective attention changes. We reflect on ourselves in relation to others, adjust our understanding of the world based on their acts and speech, and work in a separate third space between us and them to create a shared narrative with which to navigate the world.

With computer systems, we can lack an important sense of a growing relationship that will gain in dimensionality, that can generate the essential ambiguity needed for testing new knowledge and ideas. In a short, elegant essay titled “Dancing with Ambiguity,” systems biologist Pille Bunnell paints her first encounter with computational systems as a moment of total wonder and enchantment, which turned to disappointment:

I began working with simulation models in the late 1960s, using punch cards and one-day batch processing at the University of California Berkeley campus computer center. As the complexity of our computing systems grew, I like many of my colleagues, became enchanted with this new possibility of dealing with complexity. Simulation models enabled us to consider many interrelated variables and to expand our time horizon through projection of the consequences of multiple causal dynamics, that is, we could build systems. Of course, that is exactly what we did, we built systems that represented our understanding, even though we may have thought of them as mirrors of the systems we were distinguishing as such. Like others, I eventually became disenchanted with what I came to regard as a selected concatenation of linear and quasi-linear causal relations.8

Bunnell’s disappointment with the “linear and quasi-linear causal relations” is a fine description of the quandary we find ourselves in today. The “quasi-linear causal relation” describes how intelligent systems daily make decisions for us, and further yet, how character is mapped to data trails, based on consumption, taste, and online declarations.

One barrier in technology studies and rhetoric, and in non-humanist fields, is how the term poetics (and by extension, art) is taken to mean an intuitive and emotional disposition to beauty. I take poetics here to mean a mode of understanding the world through many, frequently conflicting, cognitive and metacognitive modes that work in a web with one another. Poetics, in this sense, is how we navigate our world and all its possible meanings, neither through logic nor emotion alone.

It is curious how the very architects of machine learning describe creative ability in explicitly computational terms. In a recent talk, artist Memo Akten translated the ideas of machine learning expert and godfather Jürgen Schmidhuber, who suggests that the mind’s creativity (embodied in unsupervised, freeform learning) is “fueled by our intrinsic desire to develop better compressors.”9

This process apparently serves an evolutionary purpose: the better “we are able to compress and predict, the better we have understood the world, and thus will be more successful in dealing with it.” In Schmidhuber’s vision, intelligent beings inherently seek to make order and systems out of unfamiliar new banks of information, such that:

… What was incompressible, has now become compressible. That is subjectively interesting. The amount we improve our compressor by, is defined as how subjectively interesting we find that new information. Or in other words, subjective interestingness of information is the first derivative of its subjective beauty, and rewarded as such by our intrinsic motivation system … As we receive information from the environment via our senses, our compressor is constantly comparing the new information to predictions it’s making. If predictions match the observations, this means our compressor is doing well and no new information needs to be stored. The subjective beauty of the new information is proportional to how well we can compress it (i.e. how many bits we are saving with our compression—if it’s very complex but very familiar then that’s a high compression). We find it beautiful because that is the intrinsic reward of our intrinsic motivation system, to try to maximize compression and acknowledge familiarity.10

There is a very funny desperation to this description, as though one could not bear the idea of feeling anything without it being the result of a mappable, mathematically legible process. It assumes that compression has a certain language, a model that can be replicated. In this view, seeing and finding beauty in the world is a programmatic process, an internal systemic reward for having refined our “compressor.”

But the fact of experience is that we find things subjectively beautiful for reasons entirely outside of matching predictions with observations. A sense of beauty might be born of delusion or total misreading, of inaccuracy or an “incorrect” modeling of the world. A sensation of sublimity might arise out of a totally incompressible set of factors, influences, moral convictions, aesthetic tastes.

How one feels beauty is a problem of multiple dimensions. Neuroaesthetics researchers increasingly note that brain studies do not fully capture how or why the brain responds to art as it does, though these insights are used in Cambridge Analytica-style neuromarketing and advertisements limning one’s browser. But scanning the brain gets us no closer to why we take delight in Walter Benjamin. A person might appear to be interesting or beautiful because they remind one of an ancient figure, or a time in history, or a dream of a person one might want to be like. They might be beautiful because of how they reframe the world as full of possibility, not through any direct act but only through presence, attitude, orientation.

Art, Limits, and Ambiguity

This is not to say we should design counter-systems that facilitate surreal and unreadable (that is, semantically indeterminate) gestures as a mode of resistance. As Suhail Malik and others have detailed, such moves against neoliberal capitalism are spectacular, and so their political efficacy is limited.11

New systems might, however, acknowledge unknowing, that is, the limits of our current understanding. What I do not know about others and the world shapes me. I have to accept that there are thousands of bodies of knowledge that I have no access to. I cannot think without language and I cannot guide myself by the stars, let alone commune with spirits or understand ancient religions. People not only tolerate massive amounts of ambiguity, but they need it to learn.

Art and poetry can map such trickier sites of the artifactual mind. Artists train to harness ambiguity; they create environments in which no final answer, interpretation, or set narrative is possible. They can and do already intervene in the relationality between human and banal AI, providing strategies for respecting the ambiguous and further, fostering environments in which the unknown can be explored.12 Just as the unreadable face prompts cognitive exploration, designed spaces of unknowing allow for provisional exploration. If computational design is missing what Bunnell calls “an emotional orientation of wonder,” then art and poetry might step in to insist on how “our systemic cognition remains operational in ways that are experienced as mysterious, emergent, and creative.”13

Artists can help foreground just how much neurocomputational processes cannot capture the phenomenal experiences in which we sense our place in history, in which we intuit the significance of people, deeply feel their value and importance, have gut feelings about emerging situations. There is epigenetic trauma we have no conscious access to, that is still held in the body. There are countless factors that determine any given choice we make, outside our consumer choices, our physicality, our education, and our careers, that might come from travel, forgotten conversations, oblique readings, from innumerable psychological, intellectual, and spiritual changes that we can barely articulate to ourselves.

A true mimic of our cognition that we might respect would embed logical choices within emotional context, as we do. Such grounding of action in emotional intelligence has a profound ethical importance in our lives. Philosophers like Martha Nussbaum have built their entire corpus of thought on restoring the value of emotion in cognitive process. Emotions make other people and their thoughts valuable, and make us valuable and interesting to them. There is an ethical value to emotion that is “truly felt,” such as righteous anger and grief at injustice, at violence, at erasure of dignity.14

Further, artistic interventions can help suggest a model of artificial mind that is desperately needed: one that acknowledges futurity. An artificial personality does not think about tomorrow or ten years from now, but our capacity to think of ourselves living in the future is a key mark of being human. No other animal does this in the way we do.15

This sense of futurity would not emerge without imagination. To craft future scenarios, a person must imagine a future in which she is different from who she is now. She can hold that abstract scenario before her to guide decisions in the present. She can juggle competing goals, paths, and senses of self together in her mind.

Édouard Glissant insisted on the “right to opacity,” on the right to be unknowable. This strategy is essential in vulnerable communities affected by systemic asymmetries and inequalities and the burden of being overseen. Being opaque is generally the haven of the powerful, who can hide their flows and exchanges of capital while feigning transparency. For the less powerful, an engineered opacity offers protection for the vitality of experience that cannot be coded for.

We might give to shallow AI exactly what we are being given, matching its duplicitousness, staying flexible and evasive, in order to resist. We should learn to trust more slowly, and give our belief with much discretion. We have no obligation to be ourselves so ruthlessly. We might consider being a bit more illegible.

When the interface asks how I feel, I could refuse to say how I feel in any language it will understand. I could speak in nonsense. I could say no, in fact, I cannot remember where I was, or what experiences I have had, and no, I do not know how that relates to who I am. I was in twenty places at once; I was here and a thousand miles and years away.

I should hold off on open engagement with AI until I see a computational model that values true openness (not just a simulation of openness), a model that can question feigned transparency. I want an artificial intelligence that values the uncanny and the unspeakable and the unknown. I want to see an artificial intelligence that is worthy of us, of what we could achieve collectively, one that can meet our capacity for wonder.